As part of my school's faculty development program, we're asked to observe a teacher of our choice outside our department. I asked to watch John Amos's English class. I chose him because I knew him to be an outstanding, experienced, creative, intelligent teacher. More to the point, he teaches 9th grade like I do, but his style and personality are very, very different from mine. I thought it would be useful to see how another craftsman uses a different skill set to achieve the same general goals.

So many "Physics Educators," and "educators" in general, have the Soviet attitude that if only everyone did things my way, students would learn better. I disagree.

I've always been open at my workshops and on this blog: my ideas, philosophies, and suggestions are mine alone, developed in the context of my personal strengths and weaknesses, and shaped by the ecosystems of the three schools at which I've taught. What I do cannot work for everyone. Yet, it's still worth sharing my thoughts, techniques, and ideas. Not in the sense of "do these things and you will become a great physics teacher;" but rather, "here are a few ideas you may not have considered; try them, and then either throw them out or adjust them to make them your own."

So here's my extensive reaction to John's class. I will not be adopting wholesale any of his particular techniques; but I appreciate the exposure to some different ways of approaching my craft. Many of John's ideas are in the back of my brain now, ready to manifest -- consciously or subconsciously -- in my own classes. In other words, I bought myself a few new tools. Whether and how I use them is discourse for a future time.

What happened in the class?

John included four segments in a 45-minute class:

1. Discussion of vocabulary words

2. Discussion of previous night's chapter in Bradbury's The Martian Chronicles

3. Instruction about responding to passage identification questions

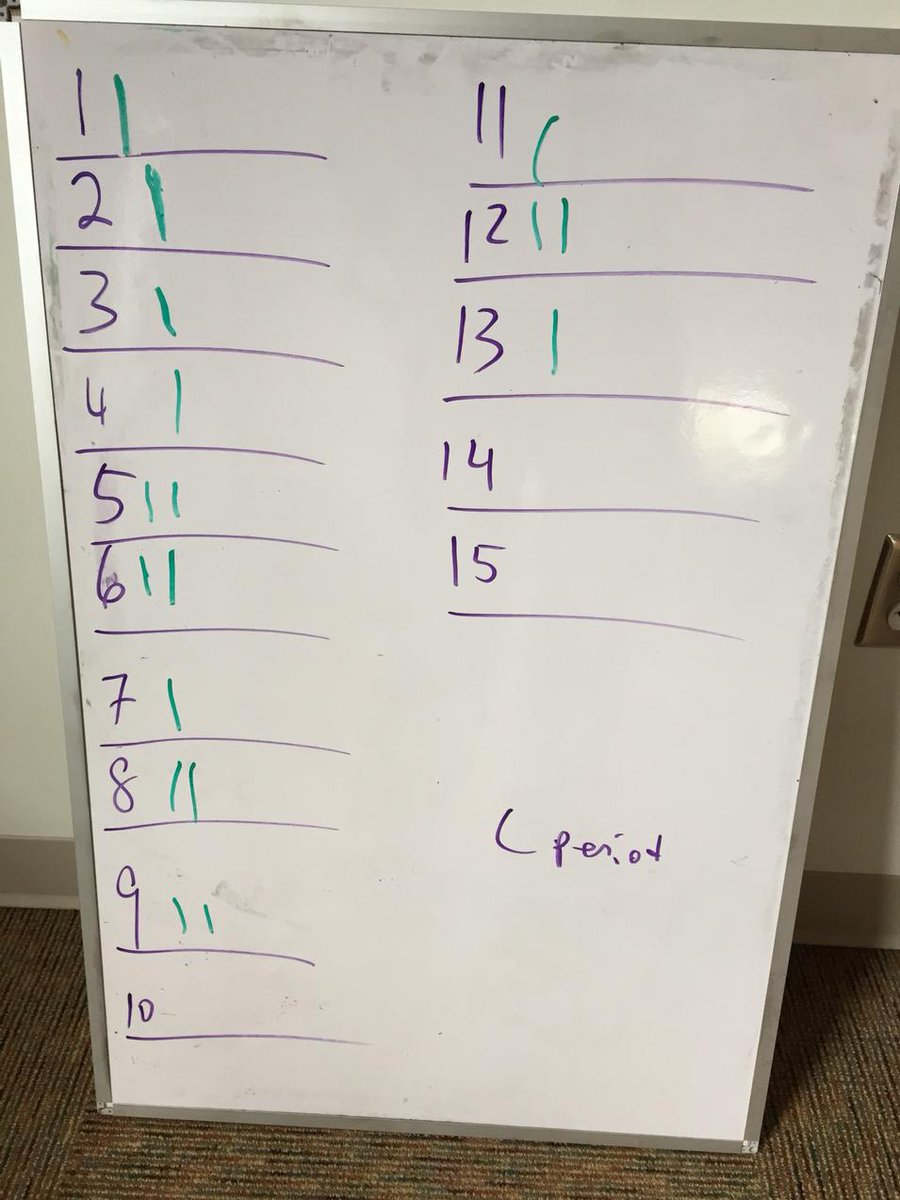

4. Practice responding to passage identification questions

This was a 9th grade general English class that included many of the same students I've worked with this year.

My reaction:

I always thought I hated kibbe.* I dreaded when my mom tried to make it. Around age 30, I realized that I liked kibbe just fine -- what I hated was my mom's cooking of the kibbe.**

*Kibbe is a Lebanese dish with bulgur wheat, ground meat, onions, and spices.

**Mom's laham mishwe (little bites of spiced lamb and onions) is wonderful. Don't tell her I said anything about the kibbe.

Similarly, my personal experience with the classroom study of writing and literature has been universally negative. I've always been aware that it's been the poor teaching and poor classroom atmosphere that turned me off to English, not the discipline of English itself. But John's class brought the source of my negative reaction to the forefront. Last week, I watched a master at work.

I've advocated to physics teachers that we give a short quiz at the beginning of every class. The purpose is as much to settle the class down as to use the quiz as a device for review. John likewise recognizes the necessity for a start-of-class routine, but does it differently. He throws vocabulary words, the ones that will be on the upcoming test, up on the screen. As soon as the first boy arrives, he begins a relaxed, informal discussion about the words. Thus, even the boy who is second to arrive feels he's joining class in medias res, and so gets his materials settled and ready for business right away. John's vocabulary discussion -- which is interesting and captivating anyway -- serves the same teaching purpose as my quiz, but is better suited to John's personality than to mine.

It almost goes without saying that we moved through four activities before any of the four had a chance to get old or stale. This class could easily have gone on for 90 minutes. Like the best entertainers, John left the class wanting more -- better 5 minutes too short than 1 minute too long.

Now, part of what made the class great was the enthusiastic and substantive participation of the students. Most, including some I know not to be A+ students, jumped in with excellent and interesting things to say, listening to each other and advancing the conversation. It helped that we weren't reading Jane Eyre; we were reading about Martians. Most of the class was invested in the book, and in the class discussion; those few who weren't sat quietly, listening, without causing distraction. Those who participated did so authentically, never playing a game of one-upmanship, never ignoring a classmate's comment.

I'm well aware that John's done considerable behind-the-scenes work over the first half of the year to set up the class I saw. At some point he's had to assert himself as alpha dog. For example:

One student -- he's from Vietnam, and in my AP physics class -- put forth some ideas which were initially confusing to the class, and even to me and John. The confusion came from many sources. His language barrier and accent made it tough to follow him. His general intellectual level is well beyond that of most of the class, so his thoughts were more complex than anything we had yet considered. And the class discussion was about linguistic metaphors for time, which requires some common cultural and idiomatic ground that isn't necessarily shared between Dixie and Southeast Asia.

I couldn't be more impressed with the class's reaction. I learned from experience in my own English classes: if I have an interesting but different take on a subject, keep my dang mouth shut, because if I don't explain it perfectly clearly right away such that everyone agrees with me, I'll have to deal with withering scorn from my classmates. Only occasionally was said scorn verbalized ("Oh, my gawd, the book's not that deep. Okay, I get it, you're smarter than we are.") Usually the negative response was manifested in body language, subtle dismissive gestures that ostracized me. My teachers either didn't notice, didn't care, or cared but didn't know how to take action.

In John's class, though, this student's classmates tried valiantly to get his point. No one made any rude snorts or eye rolls. Even those who were generally disengaged simply remained disengaged; they did not take the opportunity to get a nonverbal jab in at the smart nerdy kid.

How did John do it? How did he establish and maintain this atmosphere of genuine intellectual curiosity among 9th grade boys?

I mean, I do it... it takes every trick and tool I've ever learned, but I do it. I pounce on any student who makes a dismissive gesture, hollering loud enough for the sewage plant down the hill to hear me. I give out candy to the first student to give me a confident yet wrong response. I set up collaborative situations in which students must work with randomized class members. I have students grade each other's work so that right and wrong answers are transparent -- it's hard to make fun of someone when you know your own wrong answers will be out there for someone else to see.

But my strengths in setting tone -- my loudness, my subject's black-and-white nature -- are not in John's toolbox. He's soft-spoken, teaching a subject in which shades of gray are mandatory. So how does he do it?!?

(John did share one thing he had done -- a different student, John says, had a difficult attitude for a while. John realized that this other student needs to be front and center, always with something to do or say; then he can be a very positive contributor. So, when John had the class read a passage out loud, he carefully appointed this student to read a major part. That kept him involved and invested, and less likely to turn to the Dark Side.)

I know there's more to say here... I wish I could have come to class the next day, when he was planning to give specific feedback on students' written responses to passage identification questions. But this should give you an idea of what I saw, and what I thought about, as I observed this class to which I wish I could transport my 14-year-old self. No, I wouldn't have become an English major, but that's not the point. :-)